
I am a Member of Technical Staff at xAI working on Pretraining and Multimodal. Previously, I graduated from UC Berkeley with a Bachelor's in Computer Science, where I was advised by Prof. Jitendra Malik at BAIR.

Email / LinkedIn / GitHub / Google Scholar


My |


Rahul Ravishankar*, Zeeshan Patel*, Jathushan Rajasegaran, Jitendra Malik

CVPR 2025

project page / arXiv / code

We show how diffusion models benefit from scaling training and test-time compute for perceptual tasks and unify tasks such as depth estimation, optical flow, and amodal segmentation under the framework of image-to-image translation.


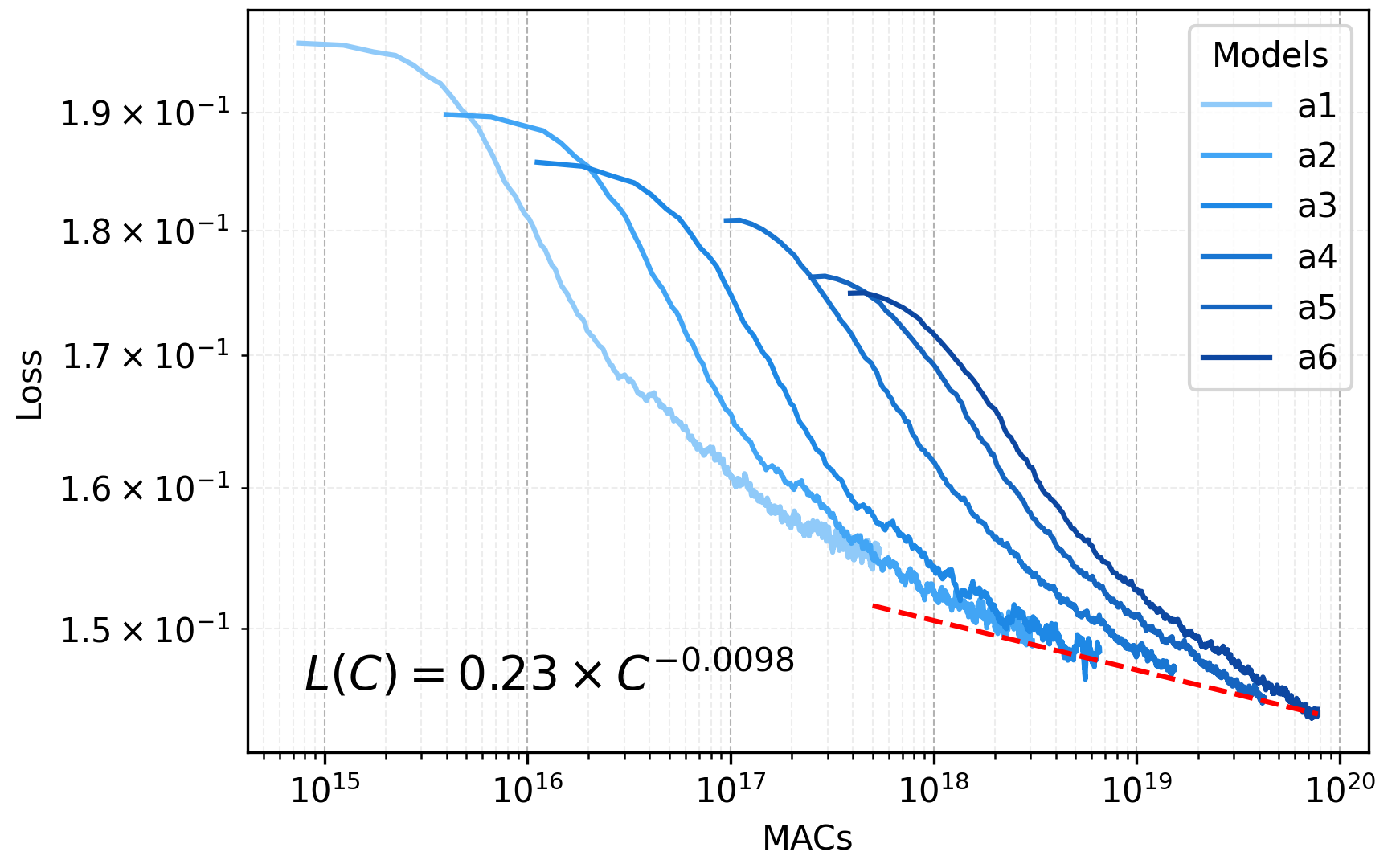

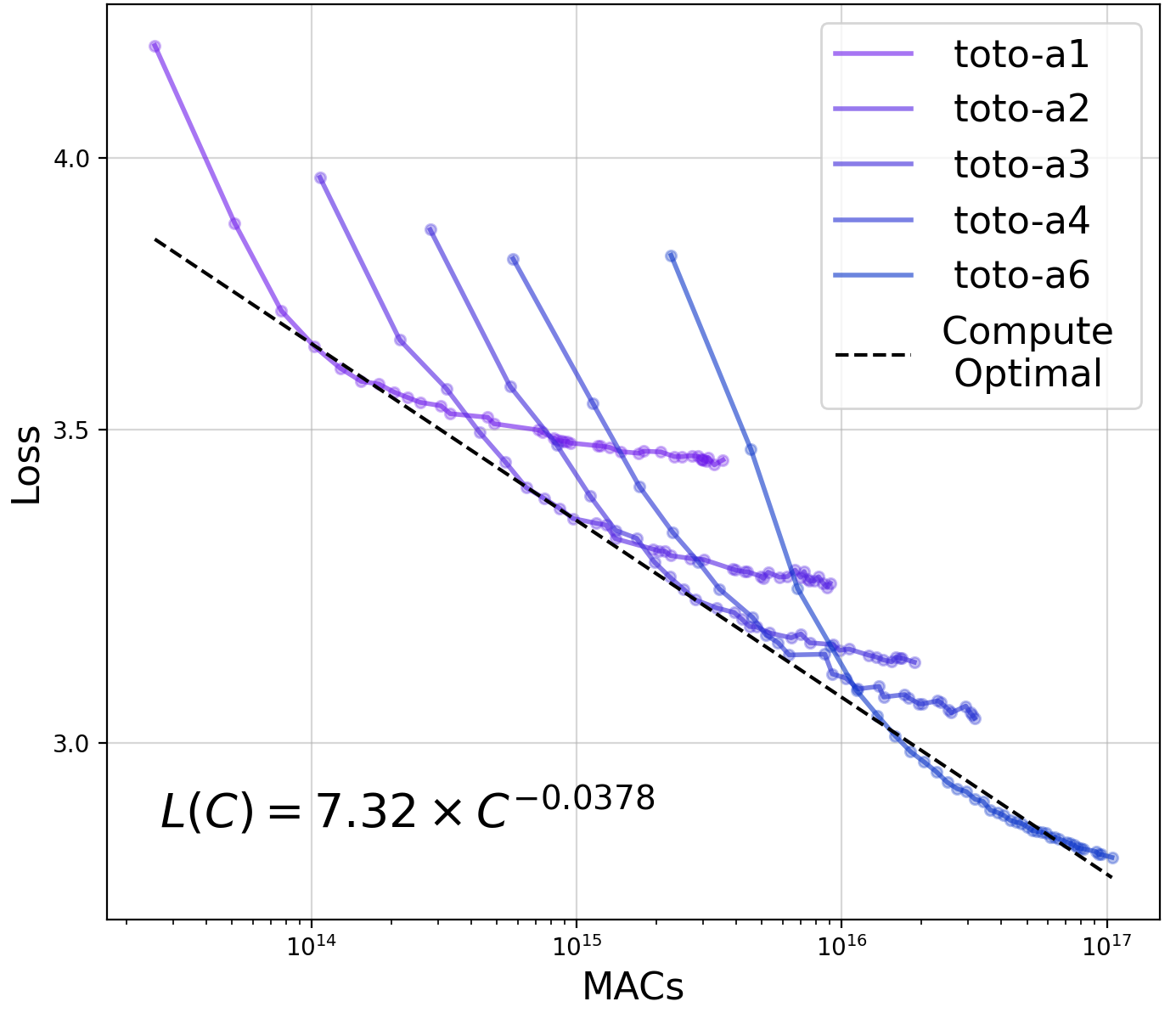

Jathushan Rajasegaran, Ilija Radosavovic, Rahul Ravishankar, Yossi Gandelsman, Christoph Feichtenhofer, Jitendra Malik

ICCV 2025

project page / arXiv / code [coming soon]

We trained LLaMA models with up to 1 billion parameters on 1 trillion visual tokens. The resulting model can perform diverse tasks, including image and video recognition, video tracking, action prediction, and robotics. We also study the scaling properties of this family of models.
